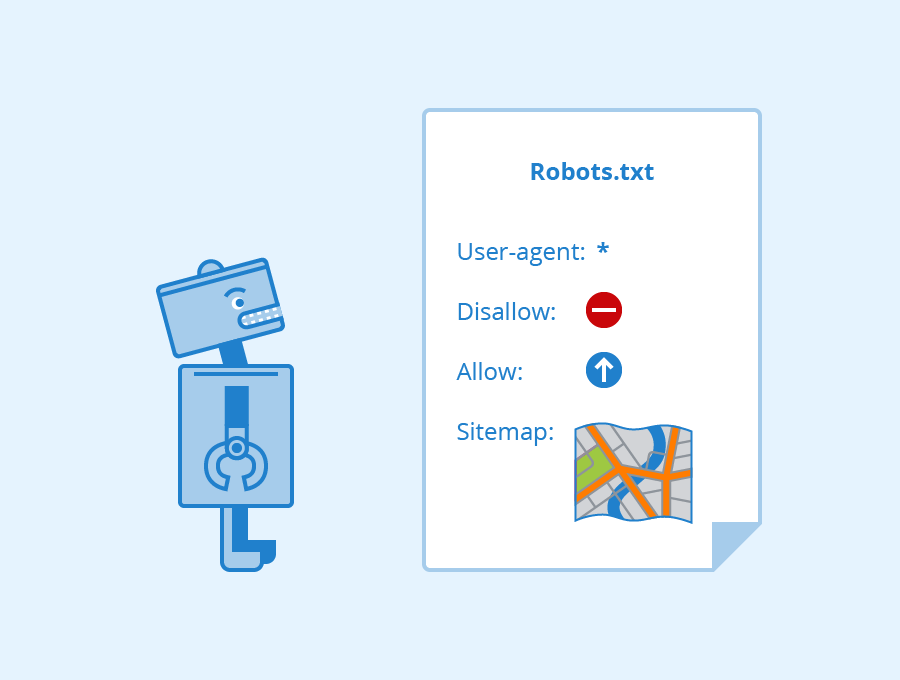

Robots.txt Optimization

- Efficient Crawling Control: Configure the robots.txt file to guide search engine bots on which parts of your website to crawl or ignore.

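- Block Unnecessary Pages: Stop bots from crawling low-value pages (e.g., admin areas, duplicate content) to conserve crawl budget. Because robots.txt is a public file that controls crawling rather than indexing or access, sensitive pages also need authentication or a noindex directive.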

- Allow High-Priority Areas: Ensure search engines can crawl the most valuable pages of your site so that they can be indexed.

- Syntax Accuracy: Validate the robots.txt file to catch syntax errors that could inadvertently block important content.

- Regular Updates: Revise robots.txt whenever the site's structure or URLs change so the rules keep matching the live site.

- Use Cases:

  - Managing e-commerce sites with complex URL structures.

  - Keeping crawlers out of staging or development environments.

  - Optimizing large websites with limited crawl budgets.
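
As a rough illustration of the blocking, allowing, and validation points above, the sketch below checks a small robots.txt against a list of expected outcomes using Python's standard-library `urllib.robotparser`. The rules, the `www.example.com` domain, the `ExampleBot` user agent, and the `check_rules` helper are all hypothetical and chosen only for this example. Python's parser may also resolve overlapping Allow and Disallow rules differently from a particular search engine, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an example shop; every path here is an assumption.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /search
Allow: /products/
"""


def check_rules(robots_txt, base_url, expectations, user_agent="ExampleBot"):
    """Return (path, expected, actual) for every path whose crawl
    permission differs from what was expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for path, expected in expectations.items():
        actual = parser.can_fetch(user_agent, base_url + path)
        if actual != expected:
            mismatches.append((path, expected, actual))
    return mismatches


# True means "should be crawlable", False means "should be blocked".
EXPECTATIONS = {
    "/": True,
    "/products/blue-widget": True,
    "/admin/login": False,
    "/cart/": False,
    "/search?q=widget": False,
}

mismatches = check_rules(ROBOTS_TXT, "https://www.example.com", EXPECTATIONS)
if mismatches:
    for path, expected, actual in mismatches:
        print(f"{path}: expected crawlable={expected}, got {actual}")
else:
    print("All rules behave as expected")
```

Re-running a check like this after each site change is one way to notice when a new rule accidentally blocks a high-priority page.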
